The "Access-Competency" Paradox in Healthcare AI (and how I'm trying to solve it)

It's a circular dilemma: healthcare professionals are denied access to the tools they need to build AI literacy because they aren't yet considered competent, but they can't become competent without those tools.

Imagine telling a pilot they can only fly a plane once they have proven they can land one safely, but also refusing to let them use a flight simulator to learn how.

This is the current state of AI literacy in healthcare.

The new healthcare paradigm will expect clinicians and pharmacists to be the “humans in the loop” who audit healthcare AI models for safety and ensure any biases are corrected. Yet hospital data governance creates a catch-22: no one can access the data until they’re an expert, but no one can become an expert without experience with the data.

I’m calling this the Access-Competency Paradox. If it’s not addressed on a systemic level, very soon, two bad things will happen:

The people who are interested in validating healthcare AI models get “locked out” of participating, and

Those who do have access to the models won’t be fully prepared for making decisions that affect real patients and clinicians.

I am creating a “flight simulator” for healthcare AI model validation - where healthcare professionals, students, and anyone else can gain experience with addressing AI model bias in a clinical setting without having to worry about patient confidentiality issues.

The Access Issue - The Fortress of PHI

In the world of healthcare, patient data is treated like radioactive material: it is incredibly powerful, but handled with extreme caution because a "leak" can be catastrophic.

Because of strict privacy laws (like HIPAA), hospitals lock their data inside a digital fortress. To get inside, you typically need to be treating a specific patient right now, or you need to be a specialized researcher with months of security clearance.

This system is designed to protect your privacy as a patient, which is good, but it creates a massive barrier for education. A pharmacist or doctor who wants to learn how to audit an AI system isn’t allowed to just "browse" patient records to practice. They are effectively locked out of the library, meaning they can’t access the raw materials they need to understand how these new AI tools actually work in the real world.

The Competency Issue - Theory is Not Experience

This lack of access creates a dangerous skills gap. Think of it like a mechanic who has studied every diagram of a car engine but has never been allowed to pop the hood because the car is considered "too expensive" to risk a scratch.

They might know the theory of how an engine runs, but they have never held a wrench, felt a bolt strip, or heard the specific rattle that means a part is loose.

Right now, because of data restrictions, we are effectively asking these "theoretical mechanics" to repair a high-speed engine (the AI) while it is moving down the highway. Without the ability to practice on a "junk car" (synthetic data) first, they are unprepared to handle the messy reality of a breakdown.

The Solution - A Flight Simulator for Healthcare AI

To solve the paradox of the “Theoretical Mechanic,” I built the Seismometer Flight Simulator.

If we cannot give learners access to real patients to practice on, we have to bring the practice to them. This project creates a digital “sandbox” - a safe, contained environment where pharmacists and clinicians can get their hands dirty with AI auditing without risking a single byte of real patient privacy.

The simulator works by combining three key technologies into one cohesive experience:

The Synthetic Patients (Synthea): First, we need data. Since we can’t use real medical records, I used a tool called Synthea to generate thousands of “synthetic” patients. These aren’t real people, but their records are designed to statistically resemble those of a real population. They have heart conditions, they take medications, and they have insurance histories. The data is high-fidelity enough to be run through an AI model but, because no record belongs to a real person, using it does not expose anyone's protected health information.

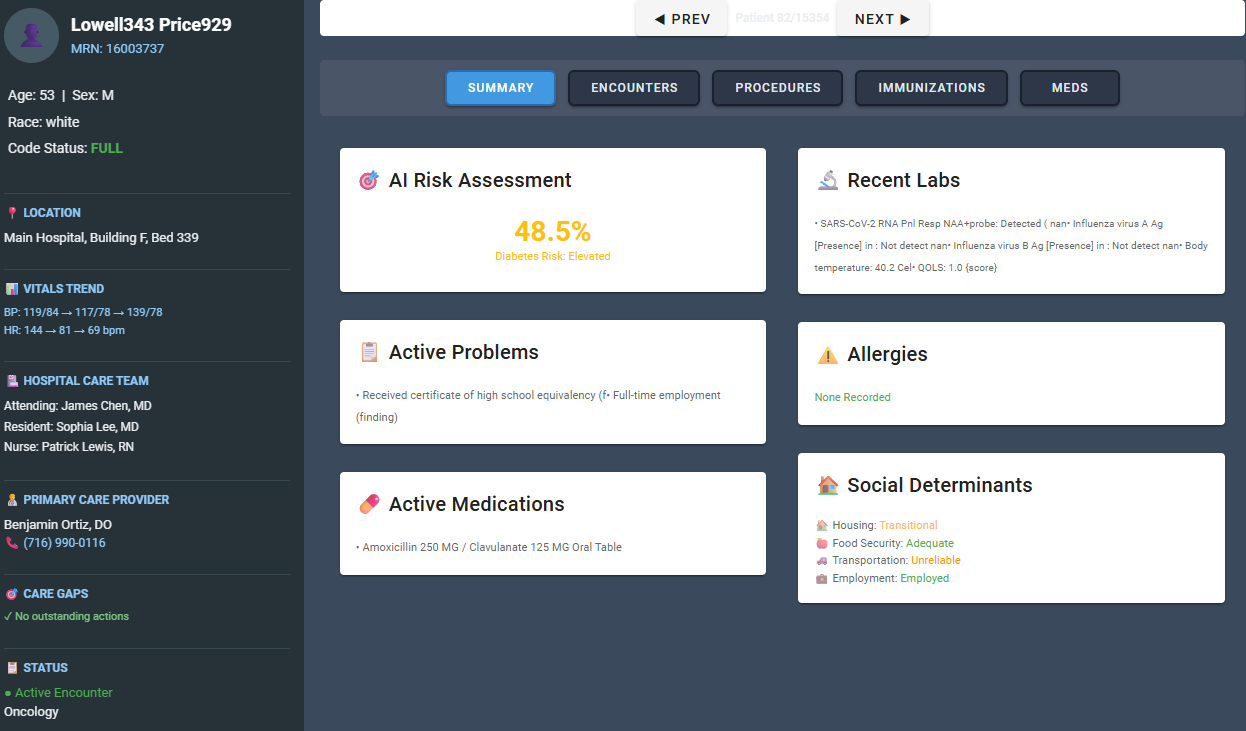

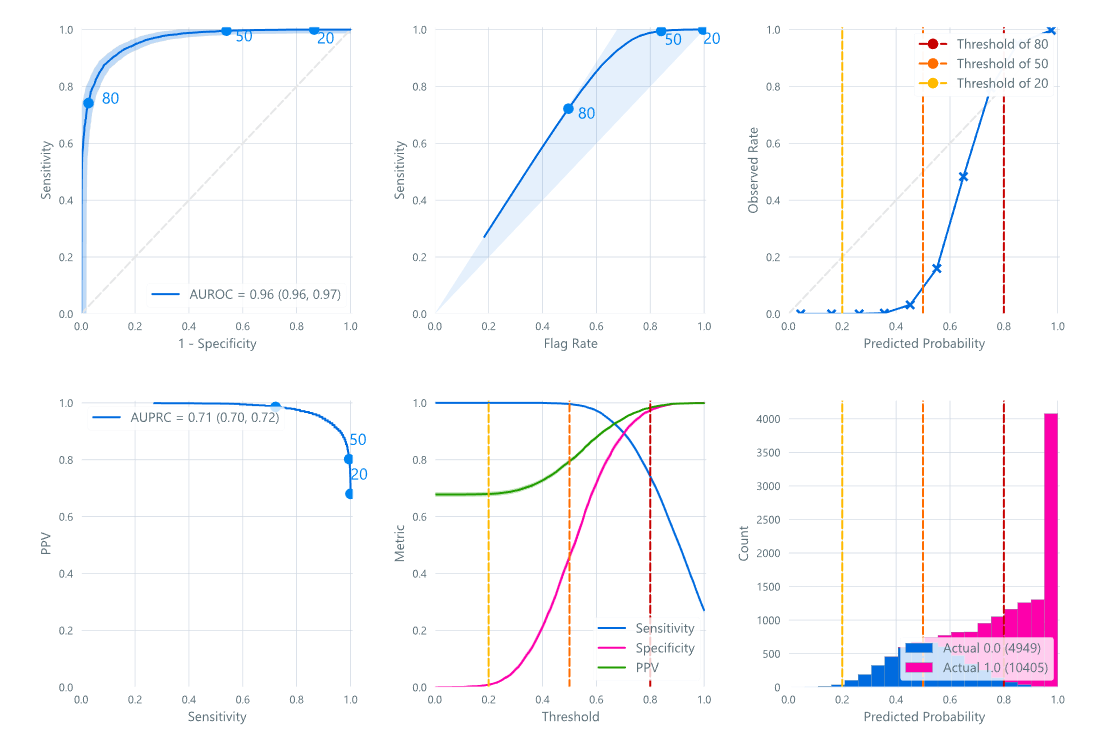

The Real Instruments (Epic Seismometer): Next, we need the tool. I integrated Seismometer, the actual software used by major hospital systems to check their AI models. Even though the patients are fake, the scanner we use to check them is real. This allows a user to learn the actual buttons, knobs, and warning lights they will see in a real hospital setting.

The Immersive Experience (Solara): Finally, I wrapped it all in a “Cockpit,” a dashboard built with Solara that simulates a real Electronic Health Record (EHR). It presents the user with an AI model that is behaving badly (maybe it’s biased against older patients, or it’s flagging healthy people as sick).

In its final form, the user will be able to run tests, tweak the sensitivity of the AI, and watch the model fail in real-time. They can mess up, misinterpret data, and “crash” the system over and over again. By the time they step into a real hospital to audit the actual AI models that affect real lives, they aren’t just working off theory anymore. They have muscle memory.
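To make the "tweak the sensitivity and watch the model fail" idea concrete, here is a minimal sketch of the kind of subgroup check a learner might run. It is not Seismometer's API and not output from Synthea: the `Patient` class, the `audit` function, the age bands, and the twelve hand-written records are all hypothetical, chosen only to show how a single alert threshold can look fine for one age group while missing cases in another.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    age: int
    risk_score: float    # model output in [0, 1]
    has_condition: bool  # ground-truth label


# Hypothetical, hand-written cohort for illustration only (not Synthea output).
COHORT = [
    Patient(34, 0.82, True),
    Patient(41, 0.71, True),
    Patient(29, 0.15, False),
    Patient(52, 0.35, False),
    Patient(47, 0.64, True),
    Patient(38, 0.22, False),
    Patient(71, 0.48, True),
    Patient(68, 0.41, True),
    Patient(80, 0.77, True),
    Patient(75, 0.30, False),
    Patient(66, 0.55, False),
    Patient(83, 0.12, False),
]


def age_band(patient):
    """Assumed two-way split; a real audit would pick bands deliberately."""
    return "65+" if patient.age >= 65 else "under 65"


def audit(cohort, threshold):
    """Return sensitivity and false-positive rate per age band."""
    counts = {}
    for p in cohort:
        c = counts.setdefault(age_band(p), {"tp": 0, "fn": 0, "fp": 0, "tn": 0})
        flagged = p.risk_score >= threshold
        if p.has_condition:
            c["tp" if flagged else "fn"] += 1
        else:
            c["fp" if flagged else "tn"] += 1

    report = {}
    for band, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        report[band] = {
            "sensitivity": c["tp"] / positives if positives else None,
            "false_positive_rate": c["fp"] / negatives if negatives else None,
        }
    return report


if __name__ == "__main__":
    # Compare two alert thresholds side by side.
    for threshold in (0.5, 0.4):
        print(f"threshold = {threshold}")
        for band, metrics in sorted(audit(COHORT, threshold).items()):
            print(f"  {band}: {metrics}")
```

In this toy cohort, a threshold of 0.5 catches every true case under 65 but only one of three in the 65+ band; lowering it to 0.4 recovers the missed older patients without adding false alarms here. Real data will rarely be this tidy, which is exactly why practicing the trade-off on synthetic records first is useful.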

Conclusion: From Gatekeeping to Empowerment

We shouldn’t be withholding the tools of the future from the people who will one day be using them to keep patients safe. We need to move clinicians and pharmacists from the sidelines of the AI revolution to the front lines.

The Seismometer Flight Simulator is proof we don’t need to choose between patient privacy and professional preparedness. By combining synthetic “patient” data with real healthcare AI tools, we can democratize the experience of high-stakes model validation.

Patients will be able to count on the people who have already seen the warning lights, handled the failures, and fixed the biases before they ever touch a real patient’s chart.

Because this is too important not to get right the very first time.


Domain expert here. You’ll need to get your data into an EHR. I don’t see any health industry protocols in your article. I know you may want to circumvent them, but they’re valuable and without them, this approach lacks credibility.

I read this like asking someone to train on a theoretical airplane simulator when they’re actually trying to get qualified on a 747. I can’t tell if your simulator is the Ace Combat 7 video game or something real.

Also, concerns around privacy are very, very real. I see people knocking HIPAA, but without HIPAA it would’ve been the Wild West. I have seen someone fired for stealing X-rays of a quarterback’s hand so that they could bet on a football game. The hand is the hand of a human being; it is personal data and should be treated respectfully. There are other reasons hackers go after healthcare data.

Love that you’re simulating data. I’ve generated a lot of data simulations, and it’s hard to get the data right, particularly when you don’t think about the particular use case ahead of time. It’s really hard to get good distributions on specific conditions without big example datasets. Also, many of the healthcare companies you are competing with that have data feeds also have agreements that let them use the data for product improvement. We did. It’s invaluable for coming up with good simulated data.

Love this, Ryan! I wonder if a patient version could also be on the cards, so that patients could better understand how their data is used and how to protect it as well. 🙏