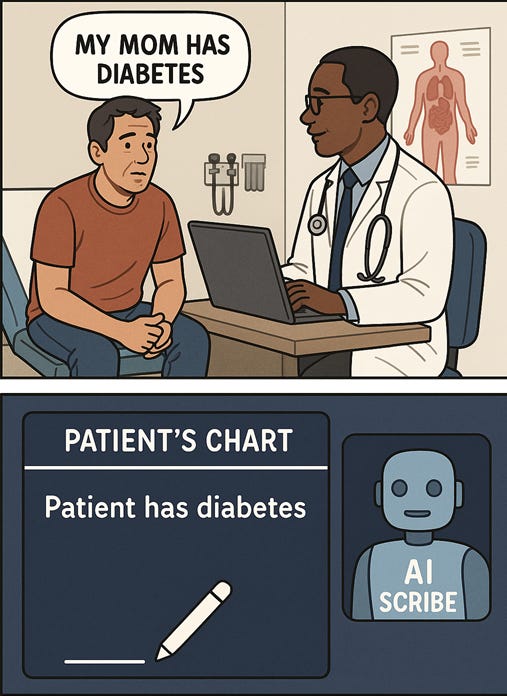

Ambient AI Medical Scribes: How AI Hallucinations in Clinical Documentation Can Harm Patients

AI medical scribes promise to cut down on charting time. But when they invent medical "facts," the health record becomes a source of harm. Here's how to mitigate errors.

I created the image above using ChatGPT's image generation tool.

The preview card was made by me in Microsoft Paint.

When an AI listens in at the doctor’s office, even a single hallucinated detail can lead to a misdiagnosis or the wrong treatment. Here’s how to avoid dangerous clinical documentation errors when using ambient AI medical scribes.

What are ambient AI medical scribes? Why do hospitals use them?

More and more doctor visits are happening with an extra quiet listener in the background: an ambient AI medical scribe that turns conversations between a patient and clinician into notes for the electronic health record (EHR).

When implemented responsibly, ambient AI can reduce the time doctors spend typing out what happened during the conversation. This has several benefits:

The clinician has more face-to-face time with the patient, and

Doctors don’t have to spend their evenings catching up on documentation in the EHR (sometimes called “pajama time”).

This has the potential to improve the satisfaction of everyone involved in the process. But things can go horribly wrong if we aren’t careful.

What are the risks of ambient AI in healthcare?

The objectives of ambient AI are to listen, transcribe, and summarize medical conversations. Advanced systems can even pull in a patient’s medication list and previous diagnoses.

But AI-generated medical notes can fail in dangerous ways.

Ambient AI can make “hallucination” errors, inserting details into the patient’s chart that were never said or done during the visit. Once that happens, those details become part of the legal medical record.

This record influences many clinical decisions: what insurance companies will authorize, what labs need to be ordered, even what surgeries might need to be performed on a patient.

Inaccurate clinical documentation can lead to devastating medical consequences. AI medical scribes can potentially automate the process of inserting falsehoods into the chart.

Examples of AI clinical documentation errors

Blatant falsehoods (the patient told the doctor he did not have chest pain, but the AI scribe wrote in the chart that he did)

Errors in the body location of a finding (the AI scribe writes that the patient felt a lump in her left breast instead of her right breast)

Transcribing the wrong medication (entering “benazepril,” a blood pressure medicine, instead of “Benadryl,” an over-the-counter allergy medicine)

These specific examples are my own, but the types of errors were described by real people during an Australian webinar on AI scribes. I could probably think of a dozen other ways things could go wrong.

Why do AI scribe hallucinations happen?

These errors usually happen in one of two ways:

The first is at the speech recognition level. Real-world exam rooms are noisier than test environments. Patients and clinicians sometimes talk over each other. Many words sound alike. Patients may not know how to pronounce the names of the medications they take.

The second factor to consider is that large language models (LLMs) are designed to produce text that sounds plausible, which is why some call the technology “glorified autocomplete.”

As long as the text sounds plausible, the AI scribe isn’t necessarily checking it against what was actually said. It may hear a patient say “mm-hmm” and assume the patient is agreeing or understanding. In reality, the patient might not be paying attention to what the doctor is saying at all.

How to prevent AI errors in EHR documentation

Patients should:

Inquire if an AI medical scribe is being used during an office or hospital visit.

Review the summary of the visit in the patient portal. If there are any mistakes, ask for corrections.

Clinicians should:

Treat AI-generated documentation as a draft at best, reviewing every line to ensure accuracy. This is especially true for exam findings, medications, and locations on the body.

Speak key decisions out loud (“we’re stopping your lisinopril today because of your cough”) so the AI scribe records them correctly.

Report sudden changes in the quality of the AI output right away.
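As a rough illustration of how that review could be prioritized, here is a minimal Python sketch that flags lines in a draft note that mention a side of the body, or a drug name that is not on the patient’s medication list. It is a hypothetical helper, not a feature of any real scribe product: the function name `flag_lines_for_review` and the tiny `KNOWN_DRUG_NAMES` and `LATERALITY_TERMS` word lists are assumptions made for this example. It can only point to where to look; it cannot tell whether a line matches what was said, so it supplements line-by-line review rather than replacing it.

```python
import difflib
import re

# Illustrative word lists only (assumptions for this sketch). A real tool would
# draw on a drug database and clinical terminology, not hand-written sets.
LATERALITY_TERMS = {"left", "right", "bilateral"}
KNOWN_DRUG_NAMES = {"atorvastatin", "benadryl", "benazepril", "lisinopril", "metformin"}


def flag_lines_for_review(note_text, medication_list):
    """Return (line_number, reason) pairs for draft-note lines that need a closer look.

    A line is flagged when it names a side of the body, or when it names a
    drug that is not on the patient's medication list.
    """
    meds_on_file = sorted(m.lower() for m in medication_list)
    flags = []
    for number, line in enumerate(note_text.splitlines(), start=1):
        for word in re.findall(r"[a-z]+", line.lower()):
            if word in LATERALITY_TERMS:
                flags.append((number, f"body side mentioned: '{word}'"))
            elif word in KNOWN_DRUG_NAMES and word not in meds_on_file:
                # Suggest a similar-looking drug that IS on file, since sound-alike
                # names (e.g. benazepril vs. Benadryl) are a known failure mode.
                close = difflib.get_close_matches(word, meds_on_file, n=1, cutoff=0.6)
                hint = f" (possible mix-up with '{close[0]}')" if close else ""
                flags.append((number, f"'{word}' is not on the medication list{hint}"))
    return flags
```

For example, for a patient whose medication list contains only Benadryl, a draft with the lines “Patient reports a lump in the left breast.” and “Continue benazepril 10 mg daily.” gets both lines flagged, and the second flag points to Benadryl as a possible intended drug.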

Healthcare leaders should:

Run pilot programs with defined goals and metrics before scaling up use of the model.

Create a quality assurance (QA) process to sample and review AI-generated notes on a pre-specified schedule.

Test performance across different accents, interpreters, and languages to avoid equity gaps.
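One way to put numbers on the QA and equity steps is a sketch like the one below, assuming the scribe exposes its raw transcript and a reviewer can produce a corrected reference transcript for each sampled visit. It draws a reproducible random sample of notes for a review period and computes word error rate (WER), a standard speech recognition metric: the word substitutions, deletions, and insertions needed to turn the AI transcript into the reference, divided by the number of reference words. The function names and record format are hypothetical and not taken from any vendor’s tools.

```python
import random
from collections import defaultdict


def word_errors(reference, hypothesis):
    """Count word substitutions, deletions, and insertions (word-level Levenshtein)."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    prev = list(range(len(hyp) + 1))  # distances from an empty reference
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # reference word deleted
                curr[j - 1] + 1,     # extra word inserted
                prev[j - 1] + cost,  # substitution (or exact match)
            )
        prev = curr
    return prev[-1]


def sample_for_review(note_ids, k, seed):
    """Reproducible random sample of up to k note IDs for one review period."""
    ids = list(note_ids)
    rng = random.Random(seed)
    return rng.sample(ids, min(k, len(ids)))


def wer_by_group(records):
    """Pooled word error rate per group.

    records: iterable of (group, reference_transcript, ai_transcript), where the
    reference is a human-verified transcript and group might be an accent,
    a language, or whether an interpreter was used.
    """
    errors = defaultdict(int)
    words = defaultdict(int)
    for group, reference, hypothesis in records:
        errors[group] += word_errors(reference, hypothesis)
        words[group] += len(reference.split())
    return {g: errors[g] / words[g] for g in errors if words[g] > 0}
```

Comparing groups side by side makes a gap visible: if visits with an interpreter show a noticeably higher rate than others, that is a signal to investigate before scaling up. WER only scores the transcript, though; the summarization step can still add errors, so sampled notes still need a clinician’s review.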

Conclusion

AI medical scribes have the potential to make patient care more personal and give doctors back more free time. But without strong AI governance best practices, they can also lead to medical falsehoods being entered into patient charts.

The safest path forward isn’t rejecting the new technology entirely; rather, we should be intentional with how we use it and be aware of where the errors might occur. This way we can maximize the benefits while reducing the risk of harm.

Acknowledgements

Thanks to Ben L over at Shared Sapience for initially posing the question of AI scribes to me, and thanks to AD at AI Governance Lead for sharing a circulating video on the topic.

Read how I use AI in my writing here: AI Use Policy

Read how I use analytics to improve my newsletter here: Privacy & Analytics
